In this post, we’re going to use Delphix to create a virtual ASM diskgroup, and provision a clone of the virtual ASM diskgroup to a target system. I call it vASM, which is pronounced “vawesome.” Let’s make it happen.

Most viewers assume Gollum was talking about Shelob the giant spider here, but I have it on good authority that he was actually talking about Delphix. You see, Delphix (Data tamquam servitium in trinomial nomenclature) is the world’s most voracious datavore. Simply put, Delphix eats all the data.

Now friends of mine will tell you that I absolutely love to cook, and they actively make it a point to never talk to me about cooking because they know I’ll go on like Bubba in Forrest Gump and recite the million ways to make a slab of meat. But if there’s one thing I’ve learned from all my cooking, it’s that it’s fun to feed people who love to eat. With that in mind, I went searching for new recipes that Delphix might like and thought, “what better meal for a ravenous data muncher than an entire volume management system?”

vASM

In normal use, Delphix links to an Oracle database and ingests changes over time by using RMAN “incremental as of SCN” backups, archive logs, and online redo. This creates what we call a compressed, deduped timeline (called a Timeflow) that you can provision as one or more Virtual Databases (VDBs) from any point in time.

However, Delphix has another interesting feature known as AppData, which allows you to link to and provision copies of flat files such as unstructured files, scripts, software binaries, and code repositories. It uses rsync to build a Timeflow and allows you to provision one or more vFiles from any point in time. But on top of that (and even cooler, in my opinion), you can create “empty vFiles”: an empty directory on a system whose storage is served straight from Delphix. It is this area that serves as an excellent home for ASM.

We’re going to create an ASM diskgroup using Delphix storage and connect to it with Oracle’s Direct NFS (dNFS) client. Because the ASM storage lives completely on Delphix, it takes advantage of Delphix’s deduplication, compression, snapshots, and provisioning capabilities.

Some of you particularly meticulous (read: cynical) readers may wonder about running ASM over NFS, even with dNFS. I’d direct your attention to this excellent test by Yury Velikanov. Of course, your own testing is always recommended.

I built this with:

- A Virtual Private Cloud (VPC) in Amazon Web Services

- Red Hat Enterprise Linux 6.5 source and target servers

- Oracle 11.2.0.4 Grid Infrastructure

- Oracle Database 11.2.0.4 Enterprise Edition

- Delphix Engine 4.2.4.0

- Alchemy

Making a vASM Diskgroup

Before we get started, let’s turn on dNFS while nothing is running. This is as simple as running the following commands in the Grid Infrastructure home:

```
[oracle@ip-172-31-0-61 lib]$ cd $ORACLE_HOME/rdbms/lib
[oracle@ip-172-31-0-61 lib]$ pwd
/u01/app/oracle/product/11.2.0/grid/rdbms/lib
[oracle@ip-172-31-0-61 lib]$ make -f ins_rdbms.mk dnfs_on
rm -f /u01/app/oracle/product/11.2.0/grid/lib/libodm11.so; cp /u01/app/oracle/product/11.2.0/grid/lib/libnfsodm11.so /u01/app/oracle/product/11.2.0/grid/lib/libodm11.so
[oracle@ip-172-31-0-61 lib]$
```
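You can confirm that the relink took effect without starting an instance. As the make output above shows, `dnfs_on` copies `libnfsodm11.so` over `libodm11.so`, so afterwards the two files should be identical. This is a minimal sketch that assumes the 11.2 library names. The function name `dnfs_enabled` is hypothetical.

```bash
#!/usr/bin/env bash
# Minimal sketch: report whether "make -f ins_rdbms.mk dnfs_on" has taken
# effect in an Oracle home. Assumes 11.2 library names (libodm11.so,
# libnfsodm11.so); other releases use different suffixes.
# Returns 0 if the dNFS ODM library is in place, 1 if not, 2 if one is missing.
dnfs_enabled() {
  local home="$1"
  local odm="$home/lib/libodm11.so"
  local nfsodm="$home/lib/libnfsodm11.so"

  # Both libraries must exist before a comparison means anything.
  if [ ! -f "$odm" ] || [ ! -f "$nfsodm" ]; then
    return 2
  fi

  # dnfs_on copies libnfsodm11.so over libodm11.so, so identical files mean
  # the dNFS-enabled ODM library is the one that will be loaded.
  cmp -s "$odm" "$nfsodm"
}
```

Example: `dnfs_enabled "$ORACLE_HOME" && echo "dNFS ODM library in place"`. This check only shows that the library is in place. The `v$dnfs_files` query later in this post is what shows dNFS actually serving the disk files.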

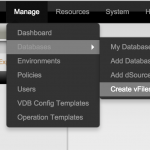

Now we can create the empty vFiles area in Delphix. This can be done through the Delphix command line interface, API, or through the GUI. It’s exceedingly simple to do, requiring only a server selection and a path.

Let’s check our Linux source environment and see the result:

```
[oracle@ip-172-31-0-61 lib]$ df -h
Filesystem            Size  Used Avail Use% Mounted on
/dev/xvda1            9.8G  5.0G  4.3G  54% /
tmpfs                 7.8G   94M  7.7G   2% /dev/shm
/dev/xvdd              40G   15G   23G  39% /u01
172.31.7.233:/domain0/group-35/appdata_container-32/appdata_timeflow-44/datafile
                       76G     0   76G   0% /delphix/mnt
```
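Before you put ASM disk files in that directory, it is worth confirming that it really is the NFS mount from the Delphix engine. A local directory that happens to share the name would not be. This is a minimal sketch that assumes GNU coreutils `stat`. The helper names `fs_type` and `is_nfs_mount` are hypothetical.

```bash
#!/usr/bin/env bash
# Minimal sketch, assuming GNU coreutils stat.
# fs_type prints the type of the filesystem that contains a path.
fs_type() {
  stat -f -c %T "$1"
}

# is_nfs_mount returns 0 when the path lives on an NFS filesystem, as the
# Delphix empty vFiles mount (/delphix/mnt in this post) should.
is_nfs_mount() {
  case "$(fs_type "$1")" in
    nfs|nfs4) return 0 ;;
    *) return 1 ;;
  esac
}
```

Example: `is_nfs_mount /delphix/mnt || echo "/delphix/mnt is not an NFS mount"`.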

Now we’ll create a couple of ASM disk files that we can add to an ASM diskgroup:

```
[oracle@ip-172-31-0-61 lib]$ cd /delphix/mnt
[oracle@ip-172-31-0-61 mnt]$ truncate --size 20G disk1
[oracle@ip-172-31-0-61 mnt]$ truncate --size 20G disk2
[oracle@ip-172-31-0-61 mnt]$ ls -ltr
total 1
-rw-r--r--. 1 oracle oinstall 21474836480 Aug  2 19:26 disk1
-rw-r--r--. 1 oracle oinstall 21474836480 Aug  2 19:26 disk2
```

The “dd if=/dev/zero of=/path/to/file” command is usually used for this purpose, but I used “truncate” instead. It quickly creates sparse files, which take up no space until blocks are actually written, and they are perfectly suitable here.
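You can see the sparseness for yourself by comparing a file's apparent size with the space actually allocated to it. By contrast, `dd if=/dev/zero of=FILE bs=1M count=20480` would write, and allocate, every one of the 20 GiB. This is a minimal sketch that assumes GNU coreutils `truncate` and `stat`. The helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Minimal sketch, assuming GNU coreutils truncate and stat.
# make_sparse_disk sets a file's size without writing any data blocks.
# The size accepts truncate suffixes such as 20G (GiB) or 16M (MiB).
make_sparse_disk() {
  truncate --size "$2" "$1"
}

# Size the file claims to have, in bytes (what ls -l shows).
apparent_bytes() {
  stat -c %s "$1"
}

# Space actually allocated, in bytes: %b is the number of allocated blocks
# and %B is the size in bytes of each block counted by %b.
allocated_bytes() {
  echo $(( $(stat -c %b "$1") * $(stat -c %B "$1") ))
}
```

For example, `make_sparse_disk /delphix/mnt/disk1 20G` mirrors the `truncate` step above. Until ASM writes to the file, `allocated_bytes` should report far less than `apparent_bytes`.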

And we’re ready! Time to create our first vASM diskgroup.

```
SQL> create diskgroup data
  disk '/delphix/mnt/disk*';

Diskgroup created.

SQL> select name, total_mb, free_mb from v$asm_diskgroup;

NAME                             TOTAL_MB    FREE_MB
------------------------------ ---------- ----------
DATA                                40960      40858

SQL> select filename from v$dnfs_files;

FILENAME
--------------------------------------------------------------------------------
/delphix/mnt/disk1
/delphix/mnt/disk2
```
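The `TOTAL_MB` value is simply the raw size of the member disks added together. Two 20 GiB files are 2 x 20480 MiB = 40960 MiB. Mirroring is not subtracted from this figure; `USABLE_FILE_MB` in `v$asm_diskgroup` is the column that accounts for redundancy. This is a minimal sketch that reproduces the number from the files themselves, assuming GNU `stat`. The name `expected_total_mb` is hypothetical.

```bash
#!/usr/bin/env bash
# Minimal sketch, assuming GNU coreutils stat.
# Sum the apparent sizes of the given disk files and print the total in MiB,
# which should match TOTAL_MB for a diskgroup built from exactly these files.
expected_total_mb() {
  local total=0 f
  for f in "$@"; do
    total=$(( total + $(stat -c %s "$f") ))
  done
  echo $(( total / 1024 / 1024 ))
}
```

Example: `expected_total_mb /delphix/mnt/disk*` should print 40960 for the two 20G files created above.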

The diskgroup has been created, and we verified that it is using dNFS. But creating a diskgroup is only a quarter of the battle. Let’s create a database in it. I’ll start with the simplest of pfiles, making use of OMF to get the database up quickly.

```
[oracle@ip-172-31-0-61 ~]$ cat init.ora
db_name=orcl
db_create_file_dest=+DATA
sga_target=4G
diagnostic_dest='/u01/app/oracle'
```

And create the database:

```
SQL> startup nomount pfile='init.ora';
ORACLE instance started.

Total System Global Area 4275781632 bytes
Fixed Size                  2260088 bytes
Variable Size             838861704 bytes
Database Buffers         3422552064 bytes
Redo Buffers               12107776 bytes
SQL> create database;

Database created.
```

I’ve also run catalog.sql, catproc.sql, and pupbld.sql and created an SPFILE in ASM, but I’ll skip pasting those here for at least some semblance of brevity. You’re welcome. I also created a table called “TEST” that we’ll try to query after the next part.
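For reference, those post-create steps can be scripted. This is a minimal sketch that only prints a SQL*Plus script, so you can review it before piping it to `sqlplus /nolog`. A few details are hypothetical: the function name, the pfile path argument, and the SPFILE name `+DATA/orcl/spfileorcl.ora`. The SYSTEM password is passed in rather than assumed. `pupbld.sql` runs as SYSTEM because it creates the SQL*Plus product user profile objects in that schema.

```bash
#!/usr/bin/env bash
# Minimal sketch: print (do not run) a SQL*Plus script for the post-create
# steps. Arguments: SYSTEM password, absolute path of the pfile used above.
# The SPFILE name below is a hypothetical choice.
post_create_sql() {
  local system_pw="$1" pfile="$2"
  cat <<EOF
connect / as sysdba
-- Data dictionary views and PL/SQL packages ("?" means \$ORACLE_HOME in SQL*Plus)
@?/rdbms/admin/catalog.sql
@?/rdbms/admin/catproc.sql
-- Keep the server parameter file in ASM alongside the datafiles
create spfile='+DATA/orcl/spfileorcl.ora' from pfile='${pfile}';
-- pupbld.sql must run as SYSTEM
connect system/${system_pw}
@?/sqlplus/admin/pupbld.sql
exit
EOF
}
```

Example, with a hypothetical `SYSTEM_PW` variable: `post_create_sql "$SYSTEM_PW" /home/oracle/init.ora | sqlplus /nolog`.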

Cloning our vASM Diskgroup

Let’s recap what we’ve done thus far:

- Created an empty vFiles area from Delphix on our Source server

- Created two 20GB “virtual” disk files with the truncate command

- Created a +DATA ASM diskgroup with the disks

- Created a database called “orcl” on the +DATA diskgroup

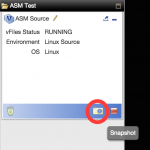

In sum, Delphix has eaten well. Now it’s time for Delphix to do what it does best, which is to provision virtual objects. In this case, we will snapshot the vFiles directory containing our vASM disks, and provision a clone of them to the target server. You can follow along with the gallery images below.

Here’s the vASM location on the target system:

```
[oracle@ip-172-31-2-237 ~]$ df -h
Filesystem            Size  Used Avail Use% Mounted on
/dev/xvda1            9.8G  4.1G  5.2G  44% /
tmpfs                 7.8G   92M  7.7G   2% /dev/shm
/dev/xvdd              40G   15G   23G  39% /u01
172.31.7.233:/domain0/group-35/appdata_container-34/appdata_timeflow-46/datafile
                       76G  372M   75G   1% /delphix/mnt
```

Now we’re talking. Let’s bring up our vASM clone on the target system!

```
SQL> alter system set asm_diskstring = '/delphix/mnt/disk*';

System altered.

SQL> alter diskgroup data mount;

Diskgroup altered.

SQL> select name, total_mb, free_mb from v$asm_diskgroup;

NAME                             TOTAL_MB    FREE_MB
------------------------------ ---------- ----------
DATA                                40960      39436
```

But of course, we can’t stop there. Let’s crack it open and access the tasty “orcl” database locked inside. I copied over the “initorcl.ora” file from my source so it knows where to find the SPFILE in ASM. Let’s start it up and verify.
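If you would rather not copy the file, you can write the pointer pfile yourself: it is a single line naming the SPFILE in ASM. When you start up without a PFILE clause, the instance looks in `$ORACLE_HOME/dbs` for `spfile<SID>.ora`, then `spfile.ora`, then `init<SID>.ora`. This is a minimal sketch. The SPFILE path and the function name are hypothetical, so use the name you actually created on your source.

```bash
#!/usr/bin/env bash
# Minimal sketch: write init<SID>.ora containing only an SPFILE pointer.
# Arguments: dbs directory, ORACLE_SID, SPFILE location in ASM.
write_pointer_pfile() {
  local dbs_dir="$1" sid="$2" spfile="$3"
  printf "SPFILE='%s'\n" "$spfile" > "$dbs_dir/init${sid}.ora"
}
```

Example: `write_pointer_pfile "$ORACLE_HOME/dbs" orcl '+DATA/orcl/spfileorcl.ora'`.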

```
SQL> startup;
ORACLE instance started.

Total System Global Area 4275781632 bytes
Fixed Size                  2260088 bytes
Variable Size             838861704 bytes
Database Buffers         3422552064 bytes
Redo Buffers               12107776 bytes
Database mounted.
Database opened.
SQL> select name from v$datafile;

NAME
--------------------------------------------------------------------------------
+DATA/orcl/datafile/system.259.886707477
+DATA/orcl/datafile/sysaux.260.886707481
+DATA/orcl/datafile/sys_undots.261.886707485

SQL> select * from test;

MESSAGE
--------------------------------------------------------------------------------
WE DID IT!
```

As you can see, the database came online, the datafiles are located on our virtual ASM diskgroup, and the table I created prior to the clone operation came over with the database inside of ASM. I declare this recipe a resounding success.

Conclusion

A lot happened here. Such is the case with a good recipe. But in the end, my actions were deceptively simple:

- Create a vFiles area

- Create disk files and an ASM diskgroup inside of Delphix vFiles

- Create an Oracle database inside the ASM diskgroup

- Clone the Delphix vFiles to a target server

- Bring up vASM and the Oracle database on the target server

With this capability, it’s possible to do some pretty incredible things. We can provision multiple copies of one or more vASM diskgroups to as many systems as we please. What’s more, we can use Delphix’s data controls to rewind vASM diskgroups, refresh them from their source diskgroups, and even create vASM diskgroups from cloned vASM diskgroups. Delphix can also replicate vASM to other Delphix engines so you can provision in other datacenters or cloud platforms. And did I mention it works with RAC? vFiles can be mounted on multiple systems, a feature we use for multi-tier EBS provisioning projects.

But perhaps the best feature is that you can use Delphix’s vASM disks as a failgroup to a production ASM diskgroup. That means that your physical ASM diskgroups (using normal or high redundancy) can be mirrored via Oracle’s built-in rebalancing to a vASM failgroup composed of virtual disks from Delphix. In the event of a disk loss on your source environment, vASM will protect the diskgroup. And you can still provision a copy of the vASM diskgroup to another system and force mount it for the same effect we saw earlier.
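Setting this up comes down to adding the Delphix-backed files to the production diskgroup as their own failure group and letting ASM rebalance. This is a minimal sketch that just prints the DDL. The diskgroup name, failgroup name, disk string, and default power of 4 are hypothetical. The ASM instance’s `asm_diskstring` must also match the new files. NORMAL redundancy keeps two copies spread across any two failgroups. So under NORMAL, every extent lands on the vASM failgroup only if the production disks form a single failgroup.

```bash
#!/usr/bin/env bash
# Minimal sketch: print the DDL that adds Delphix-backed files to an existing
# NORMAL or HIGH redundancy diskgroup as a separate failure group.
# Arguments: diskgroup, failgroup name, disk string, optional rebalance power.
add_vasm_failgroup_sql() {
  local dg="$1" fg="$2" diskstring="$3" power="${4:-4}"
  printf "alter diskgroup %s add failgroup %s disk '%s' rebalance power %s;\n" \
    "$dg" "$fg" "$diskstring" "$power"
}
```

Example: `add_vasm_failgroup_sql data vasm '/delphix/mnt/disk*'` prints `alter diskgroup data add failgroup vasm disk '/delphix/mnt/disk*' rebalance power 4;`, which you would run in the ASM instance.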

There is plenty more to play with and discover here. But we’ll save that for dessert. Delphix is hungry again.

Nice recipe, Steve. It seems a “side car” of production to a failgroup in vASM would really penalize writes, since each one would involve a mirrored write to the production-quality storage and a write to the vASM in the appliance, no? Maybe if both sides of the “side car” are dNFS it wouldn’t matter so much? Thoughts?

Kevin,

The side car is something that I really want to dig deeper into, because it’s an incredibly elegant solution while also being “vulnerable” due to its symbiotic relationship with production storage. Delphix doesn’t preclude “production-quality storage,” given sufficiently fast disks spread across multiple LUNs and a fast enough network (e.g. bonded 10GbE), but the absence of a “preferred write failgroup” option in Oracle is enough to warrant further testing here.

Side-car has pros and cons. The pros are always easy to pick out…the cons are a) taxing hosts with a system-level 100% increase in write payload under NORMAL redundancy, and b) taxing checkpoints with writes that are only as fast as the slowest runner (the same goes for sort spills, etc., where applicable).
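The arithmetic behind those two costs is simple to sketch. ASM writes one copy per redundancy level: one for EXTERNAL, two for NORMAL, three for HIGH. NORMAL therefore doubles the physical write payload. And as the comment says, a mirrored write is only as fast as the slowest copy. This is a minimal sketch with hypothetical helper names. The numbers in the usage note are illustrative, not measurements.

```bash
#!/usr/bin/env bash
# Minimal sketch of side-car write costs; helper names are hypothetical.
# Number of copies ASM writes for each diskgroup redundancy level.
asm_copies() {
  case "$1" in
    EXTERNAL) echo 1 ;;
    NORMAL)   echo 2 ;;
    HIGH)     echo 3 ;;
    *)        return 1 ;;
  esac
}

# Physical MB written for a given logical MB at a redundancy level.
physical_write_mb() {
  local copies
  copies=$(asm_copies "$2") || return 1
  echo $(( $1 * copies ))
}

# A mirrored write completes when its slowest copy does: print the maximum
# of the per-copy latencies given as arguments (in microseconds).
mirrored_write_latency_us() {
  local max=0 t
  for t in "$@"; do
    if (( t > max )); then max=$t; fi
  done
  echo "$max"
}
```

For example, `physical_write_mb 100 NORMAL` prints 200, and `mirrored_write_latency_us 250 1200` prints 1200. In the second case, a fast local copy gains nothing when the other copy is slow.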

I also forgot to add that this side-car model requires a much more complex fabric…but that assumes prod is not on dNFS and the side-car is.

Just stuff to consider.

It would be nice to have a “preferred write failgroup” option in the production instance, but barring that, the typical way to offload work from prod is to have a Data Guard standby instance provide the writes to the virtual ASM for cloning purposes.